SENSEable Shoes – Hands-free and Eyes-free Mobile Interaction

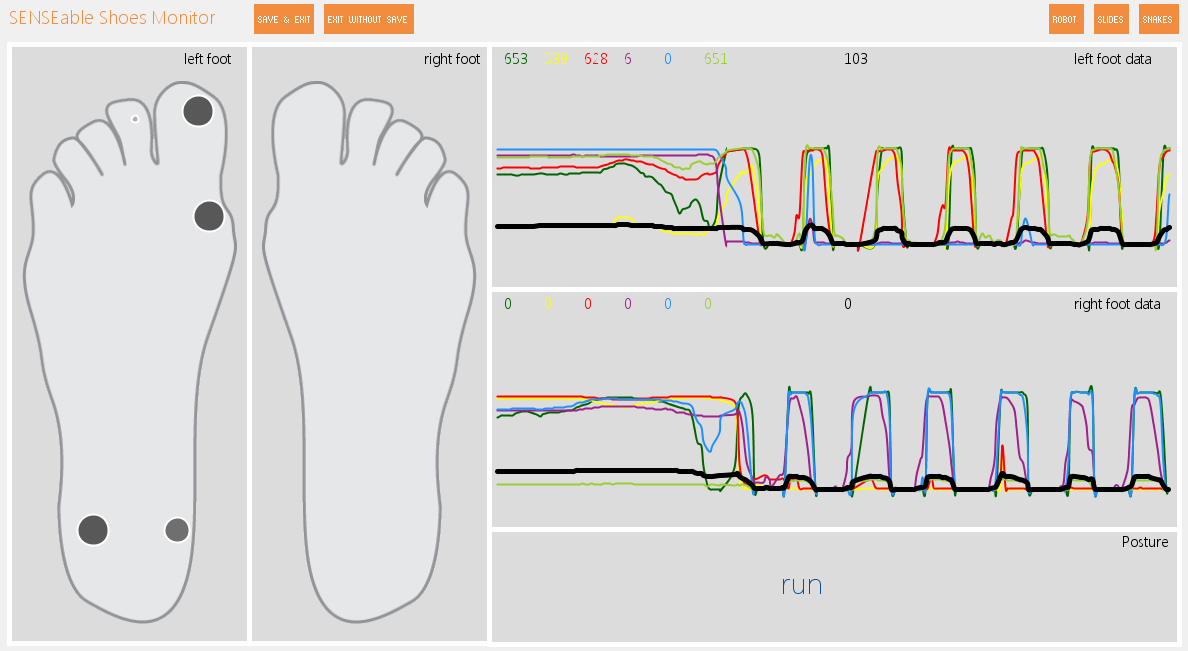

SENSEable Shoes is a hands-free and eyes-free foot-computer interface that supports on-the-go interaction with the surrounding environment. We recognize a range of low-level activities by measuring the continuous distribution of the user’s weight over the feet with twelve Force Sensing Resistors (FSRs) embedded in the shoe insoles. Using the sensor data as input, a Support Vector Machine (SVM) classifier identifies up to eighteen mobile activities, as well as four-directional foot-control gestures, with approximately 98% accuracy. By recognizing the user’s current activity and foot gestures, the system offers a nonintrusive, always-available input method. We present the design and implementation of the system and several proof-of-concept applications.

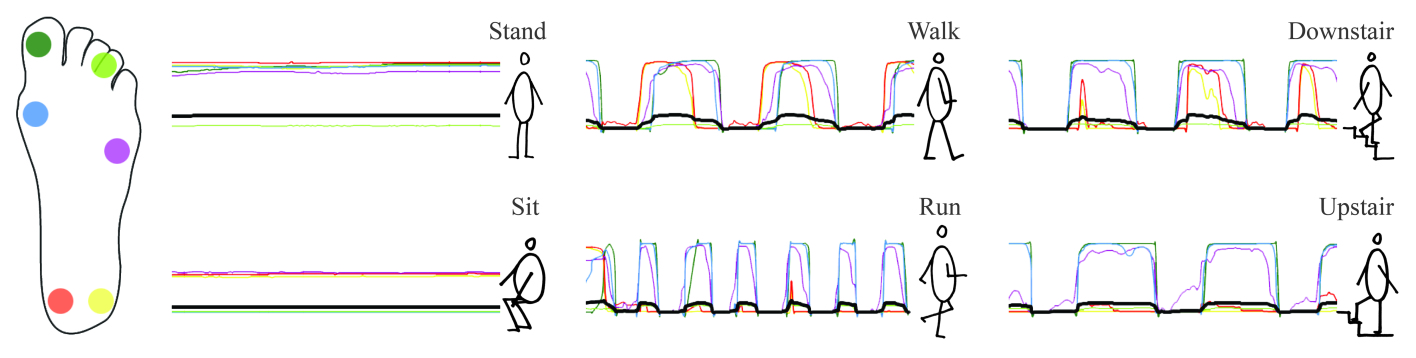

A person’s weight is not distributed uniformly over the sole of the foot. Because the sole is arched rather than flat, the weight is concentrated mainly on the hallux (big toe), the first metatarsal head and the calcaneus (heel bone). When a person is sitting, the weight of the upper body rests mostly on the chair, so the load on the feet is relatively small. When standing, the body’s whole weight is distributed roughly evenly over both feet, and leaning left or right shifts this distribution. When walking, the distribution changes with each step: the load on the front and rear of the foot alternately rises and falls because not all parts of the sole touch the ground at once. These changes reflect the wearer’s activity, and each activity produces its own characteristic signature of weight-distribution changes.
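To make the idea of a weight-distribution signature concrete, here is a minimal Python sketch that reduces one foot’s six FSR readings to a total load, a center of pressure and the share of load carried by the forefoot. The sensor coordinates in `SENSOR_POSITIONS` and the function name `weight_features` are hypothetical, and the sketch assumes raw readings are proportional to force, which is only an approximation for FSRs.

```python
from typing import Dict, Optional, Sequence

# Hypothetical (x, y) positions of the six FSRs on one insole, in a normalized
# frame: x runs from medial (0.0) to lateral (1.0), y from heel (0.0) to toe (1.0).
# The actual sensor layout is not given in the text.
SENSOR_POSITIONS = [
    (0.30, 0.95),  # hallux (big toe)
    (0.25, 0.75),  # first metatarsal head
    (0.70, 0.70),  # fifth metatarsal head
    (0.75, 0.45),  # lateral midfoot
    (0.40, 0.10),  # heel, medial side
    (0.60, 0.10),  # heel, lateral side
]


def weight_features(readings: Sequence[float]) -> Dict[str, Optional[float]]:
    """Summarize one foot's readings as total load, center of pressure and forefoot share.

    Raw readings are treated as proportional to force, which is only a rough
    approximation because FSR response is nonlinear.
    """
    if len(readings) != len(SENSOR_POSITIONS):
        raise ValueError("expected exactly one reading per sensor")
    total = float(sum(readings))
    if total == 0:
        # Foot off the ground: no center of pressure can be computed.
        return {"total": 0.0, "cop_x": None, "cop_y": None, "front_share": None}
    # Center of pressure: reading-weighted average of the sensor positions.
    cop_x = sum(r * x for r, (x, _) in zip(readings, SENSOR_POSITIONS)) / total
    cop_y = sum(r * y for r, (_, y) in zip(readings, SENSOR_POSITIONS)) / total
    # Load on sensors in the front half of the insole (y >= 0.5).
    front = sum(r for r, (_, y) in zip(readings, SENSOR_POSITIONS) if y >= 0.5)
    return {"total": total, "cop_x": cop_x, "cop_y": cop_y, "front_share": front / total}


# Heel strike versus toe-off during a single step (made-up illustrative readings).
heel_strike = weight_features([0, 0, 0, 0, 400, 400])
toe_off = weight_features([400, 300, 100, 0, 0, 0])
print(heel_strike["front_share"], toe_off["front_share"])  # 0.0 1.0
```

During walking, `front_share` would swing between values near 0 and near 1 with each step, whereas standing still would keep it roughly constant; features like these are one plausible way to summarize the signatures described above before classification.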

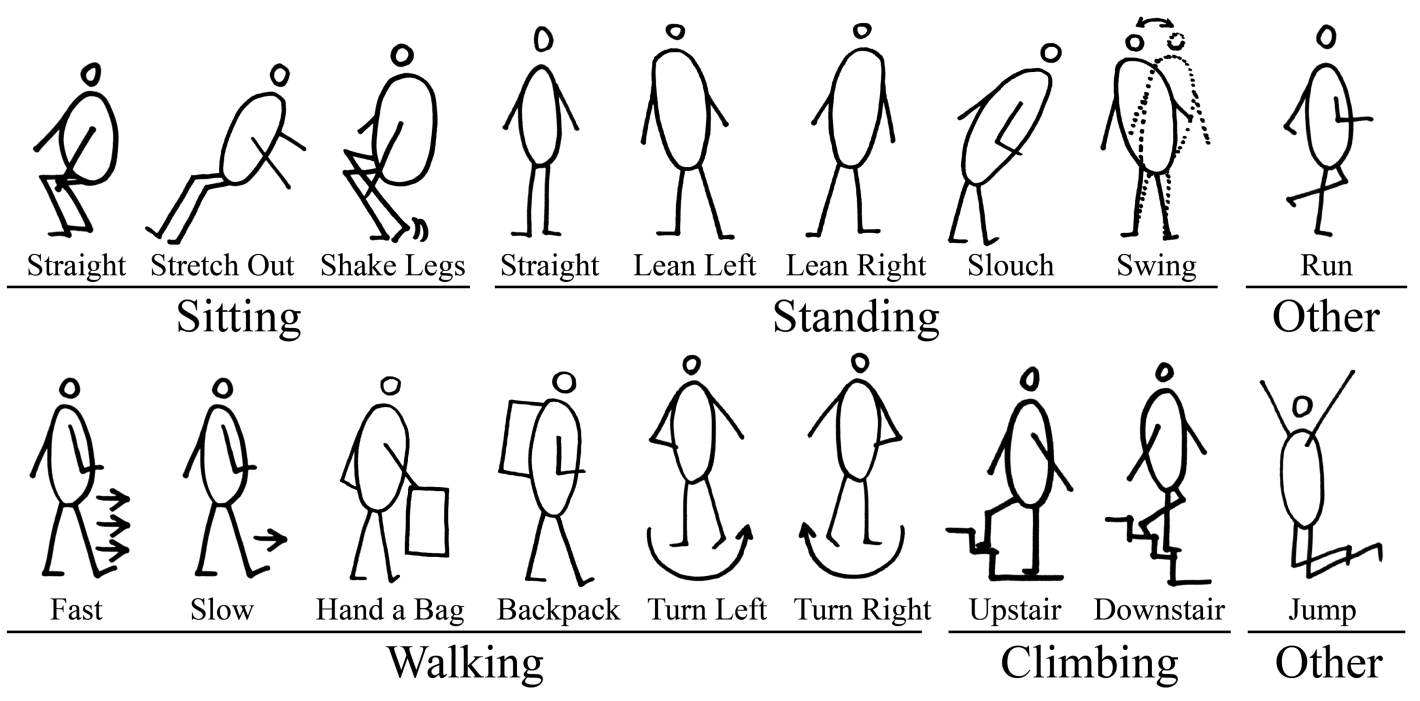

We observed common low-level activities in a mobile context and classified them as static or dynamic. Static activities include sitting (sitting straight, stretching out and shaking the legs) and standing (standing straight, leaning left or right, swinging and slouching). Dynamic activities include walking (slow and fast, backing up and carrying a bag, turning left and right), running, jumping and climbing stairs (up and down). Some activities, such as sitting straight and stretching out, are similar, but distinguishing these minor differences tells us more about the user’s state in a mobile environment. For instance, sitting straight could imply that the user is in a relatively formal setting, while stretching out may suggest a relaxed one.
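The activity hierarchy above maps naturally onto a nested dictionary. The sketch below uses hypothetical label names; the fine-grained labels are what a classifier would predict, and `coarse_category` recovers the static/dynamic grouping. Counted this way, the list in the text yields eighteen labels, the same number quoted in the introduction.

```python
from typing import Dict, List

# Hypothetical label names for the activities described above, grouped as in the text.
ACTIVITIES: Dict[str, Dict[str, List[str]]] = {
    "static": {
        "sitting": ["sitting_straight", "stretching_out", "legs_shaking"],
        "standing": ["standing_straight", "leaning_left", "leaning_right",
                     "swinging", "slouching"],
    },
    "dynamic": {
        "walking": ["walking_slow", "walking_fast", "backing_up",
                    "carrying_bag", "turning_left", "turning_right"],
        "running": ["running"],
        "jumping": ["jumping"],
        "stairs": ["stairs_up", "stairs_down"],
    },
}


def all_labels() -> List[str]:
    """Flatten the hierarchy into the fine-grained class labels a classifier would predict."""
    return [label
            for groups in ACTIVITIES.values()
            for labels in groups.values()
            for label in labels]


def coarse_category(label: str) -> str:
    """Map a fine-grained label back to 'static' or 'dynamic'."""
    for category, groups in ACTIVITIES.items():
        for labels in groups.values():
            if label in labels:
                return category
    raise KeyError(f"unknown activity label: {label}")


print(len(all_labels()))             # 18
print(coarse_category("slouching"))  # static
```

Keeping the hierarchy explicit lets an application fall back to the coarse category when two fine-grained classes, such as sitting straight and stretching out, are easily confused.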

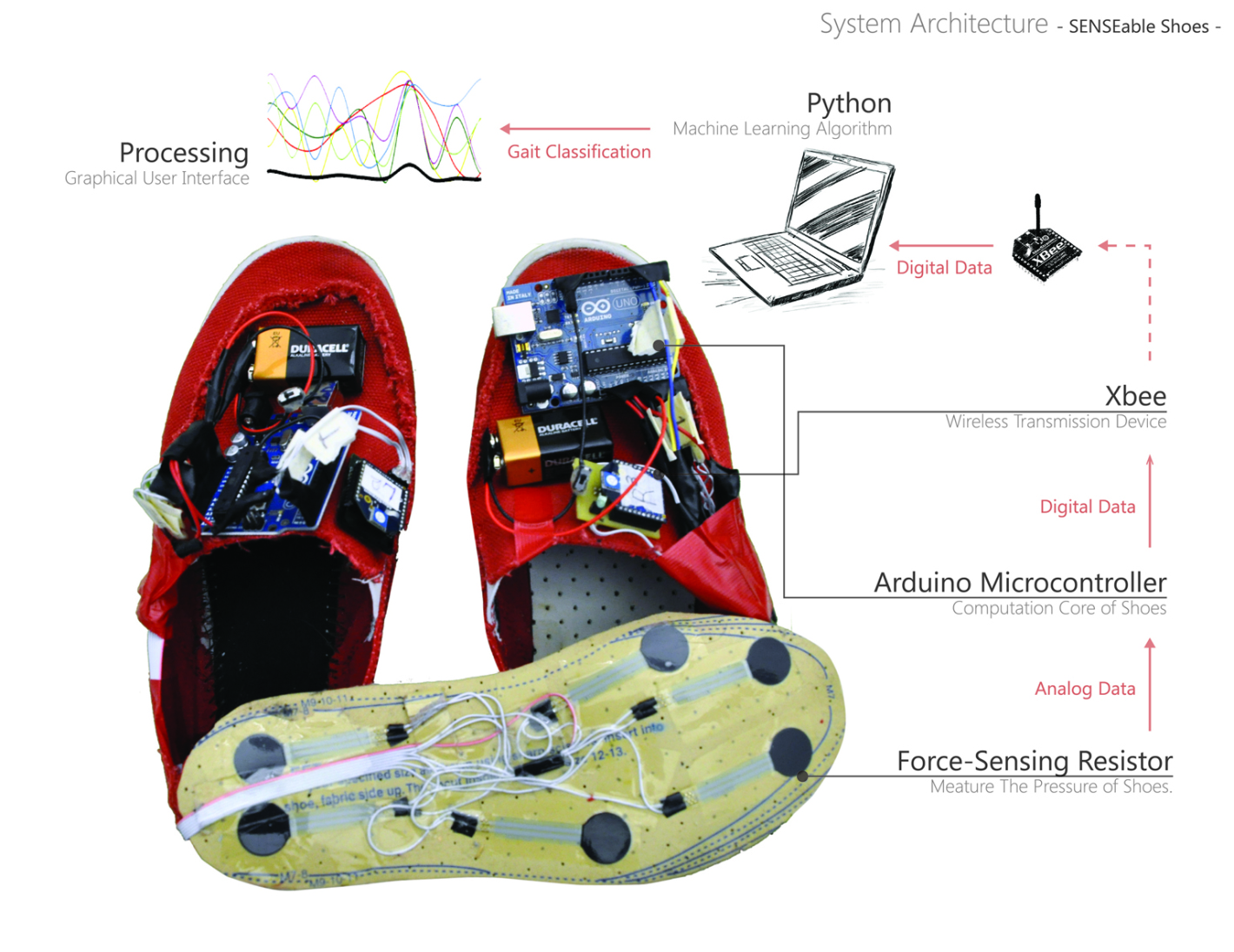

The hardware platform consists of a pair of ordinary canvas shoes, a pair of 4 mm sponge insoles, twelve FSRs (six per shoe, each with a round sensing area 12.7 mm in diameter), two microcontrollers, two wireless transceivers and power supplies. The FSRs are mounted underneath the insoles and wired to the microcontrollers. The sensor signals go to two Arduino UNO boards with ATmega328 microcontrollers, one for each foot, each connected to an XBee wireless transceiver running the ZigBee protocol. Because of their size, the boards are attached to the top of the shoes and powered by 9 V alkaline batteries. Each microcontroller uses its six analog-to-digital channels to sample the six FSRs, whose readings represent the pressure distribution under the insole. The data from each sensor are labeled and then transmitted to a laptop, where a Python program reads the raw data, identifies activities with an SVM classifier and displays the data in a graphical user interface.
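On the laptop side, the labeled per-foot data must be parsed and merged into a single twelve-value input vector. The sketch below assumes a hypothetical text packet format, `L,v1,...,v6` or `R,v1,...,v6`, one line per sample; the actual wire format is not described in the text, and reading the serial port (for example with pyserial) is left out. `parse_frame` and `FrameMerger` are illustrative names.

```python
from typing import Dict, List, Optional, Tuple

SENSORS_PER_FOOT = 6
ADC_MAX = 1023  # the ATmega328 ADC is 10-bit, so readings fall in 0..1023


def parse_frame(line: str) -> Optional[Tuple[str, List[int]]]:
    """Parse a hypothetical 'L,v1,...,v6' or 'R,v1,...,v6' line; return (foot, values) or None."""
    parts = line.strip().split(",")
    if len(parts) != SENSORS_PER_FOOT + 1 or parts[0] not in ("L", "R"):
        return None
    try:
        values = [int(p) for p in parts[1:]]
    except ValueError:
        return None
    if any(v < 0 or v > ADC_MAX for v in values):
        return None  # out-of-range value: likely a corrupted packet
    return parts[0], values


class FrameMerger:
    """Keep the latest frame from each shoe and emit a 12-value vector once both have reported."""

    def __init__(self) -> None:
        self.latest: Dict[str, List[int]] = {}

    def feed(self, line: str) -> Optional[List[int]]:
        parsed = parse_frame(line)
        if parsed is None:
            return None  # drop malformed packets instead of crashing the pipeline
        foot, values = parsed
        self.latest[foot] = values
        if "L" in self.latest and "R" in self.latest:
            # Left-foot sensors first, then right-foot sensors.
            return self.latest["L"] + self.latest["R"]
        return None


merger = FrameMerger()
merger.feed("L,120,340,80,15,600,580")
print(merger.feed("R,110,300,95,20,590,610"))  # 12 values, left foot first
```

This sketch pairs whatever frame is newest from each shoe without time alignment; since the two boards transmit independently, a real system might timestamp frames or resample both streams to a common rate.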

We implement the SVM classifier with Orange, a data-mining toolkit with a Python API. New samples are passed to the trained classifier to predict the current activity. Accuracy is estimated with ten-fold cross-validation: the dataset is split into ten parts, each part serves once as the test set while the remaining nine form the training set, and the results of the ten rounds are combined. In our tests, the classifier achieved an overall accuracy of about 98%. We use the same training procedure to classify simple four-directional foot gestures. These gestures are based on the weight distribution over different parts of the right foot; they require only subtle foot movements and are barely noticeable to a casual observer.
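The ten-fold cross-validation procedure can be sketched in plain Python. To keep the example self-contained, it swaps in a simple nearest-centroid classifier in place of the SVM; this stand-in is not the authors’ method, and the function names and toy data are hypothetical. Only the fold logic illustrates the procedure described above.

```python
import random
from typing import Callable, Dict, Hashable, List, Sequence

Vector = Sequence[float]
Predictor = Callable[[Vector], Hashable]


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> List[List[int]]:
    """Shuffle the sample indices and deal them into k folds of nearly equal size."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(X: Sequence[Vector], y: Sequence[Hashable],
                   train: Callable[[List[Vector], List[Hashable]], Predictor],
                   k: int = 10, seed: int = 0) -> float:
    """Return the fraction of samples classified correctly when each is held out once."""
    correct = 0
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_X = [X[i] for i in range(len(X)) if i not in held_out]
        train_y = [y[i] for i in range(len(X)) if i not in held_out]
        predict = train(train_X, train_y)  # fit on the other k-1 folds
        correct += sum(predict(X[i]) == y[i] for i in test_idx)
    return correct / len(X)


def train_nearest_centroid(X: List[Vector], y: List[Hashable]) -> Predictor:
    """Stand-in classifier (NOT an SVM): predict the class whose mean vector is closest."""
    sums: Dict[Hashable, List[float]] = {}
    counts: Dict[Hashable, int] = {}
    for xi, yi in zip(X, y):
        acc = sums.setdefault(yi, [0.0] * len(xi))
        for j, v in enumerate(xi):
            acc[j] += v
        counts[yi] = counts.get(yi, 0) + 1
    centroids = {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

    def predict(x: Vector) -> Hashable:
        # Squared Euclidean distance to each class centroid.
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

    return predict


# Toy data: low foot load for sitting, high for standing (two features each).
X = [[10 + i, 12 + i] for i in range(20)] + [[500 + i, 480 + i] for i in range(20)]
y = ["sitting"] * 20 + ["standing"] * 20
print(cross_validate(X, y, train_nearest_centroid))  # 1.0
```

The sketch pools correct predictions over all folds, which equals the mean per-fold accuracy when the folds have equal size. To approximate the setup described here, `train_nearest_centroid` could be replaced by a function that fits an SVM, for example scikit-learn’s `SVC`; the specific Orange calls used in the project are not given in the text.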

Created by:

Huaishu Peng & Yen-Chia Hsu.